Using Queues and Jobs to postpone Tasks in Laravel

In this post we will learn how to use queues and jobs in Laravel to run work in the background.

Laravel lets you build all kinds of systems, from simple to complex, and many of their tasks are computationally expensive. This is one of the main disadvantages of web applications compared with desktop applications: web applications (the traditional ones, at least) usually have fewer or more limited computing resources. We see this even in simple operations such as sending an email or processing an image: when they run on the main server thread (the same one that handles requests to the server), the server takes a few extra seconds to return a response, and that additional time is what it takes to complete the task. Additionally, if the operation exceeds the available computing capacity, or many people perform operations of this type at the same time, the server may return a timeout error.

For these reasons, we need a mechanism to handle all these processes efficiently. The way to do it is by delegating: instead of running these operations on the main thread, we send them to a process (or several, depending on how we configure it) that is responsible for processing tasks consecutively, one after the other. With this, we have already introduced the definition of queues and jobs.

Jobs are these computationally expensive operations (sending an email, processing an image, processing an Excel file, generating a PDF, etc.), which are sent to a queue or queues (depending on how we configure it) to be processed efficiently; that is, we can enable a certain number of secondary processes, managed by the queues, to process these tasks. Therefore, with this system, it does not matter how many jobs exist at the same time: they will be processed little by little without saturating the system. Additionally, it is possible to specify job priorities so that some are executed before others.

In short, the system of queues and workers improves response capacity, lets us scale horizontally by increasing the number of workers available to process jobs, and lets us re-execute failed jobs, which gives better tolerance to system failures. All of this happens asynchronously, without affecting the main thread. With the importance of this system clarified, let's learn how to implement it.
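Before touching Laravel itself, the idea can be sketched in plain PHP. This is only an illustration, not how Laravel stores or runs jobs: the in-memory SplQueue, the closures and the job names are all assumptions made for the example. It shows the two halves of the mechanism: dispatching only registers a job, and a separate loop (the "worker") later runs the jobs one at a time, in arrival order.

```php
<?php
// Hypothetical stand-in for a queue: an in-memory FIFO of closures.
$queue = new SplQueue();
$log = [];

foreach (['send email', 'resize image', 'generate PDF'] as $name) {
    // "Dispatching" only registers the job; nothing is executed yet.
    $queue->enqueue(function () use ($name, &$log): void {
        $log[] = "done: {$name}";
    });
}

// At this point three jobs are pending and none has run.
$pendingBeforeWorker = count($queue);

// The "worker": takes one job at a time, in the order they arrived.
while (!$queue->isEmpty()) {
    $job = $queue->dequeue();
    $job();
}

print_r($log);
```

In a real application the queue lives outside the request (a database table, Redis, and so on), which is why pending jobs survive until a worker picks them up.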

Queue driver

First, we must choose a queue driver from among the existing ones:

- 'sync': The 'sync' driver executes queued jobs immediately, within the same request-response cycle. It is suitable for development and testing environments, but not for production.

- 'database': The 'database' driver stores queued jobs in a database table, from which a separate queue worker process picks them up.

- 'redis': The 'redis' driver uses Redis as the queue store.

- 'beanstalkd': The 'beanstalkd' driver uses the Beanstalkd queue service to process queues.

- 'sqs' (Amazon Simple Queue Service): The 'sqs' driver is used for integration with Amazon SQS.

- 'rabbitmq': The 'rabbitmq' driver allows RabbitMQ to be used as the queue backend; it is provided by a third-party package rather than by the framework itself.

- 'null': The 'null' driver is used to disable the queuing system completely.

By default, the database driver is configured:

config/queue.php

'default' => env('QUEUE_CONNECTION', 'database')

To carry out the following tests you can use the database, although Redis is usually an excellent option because it is a fast and efficient database; we installed it previously, and it is the one we will use:

config/queue.php

'default' => env('QUEUE_CONNECTION', 'redis')

Finally, we start the process that executes the jobs with:

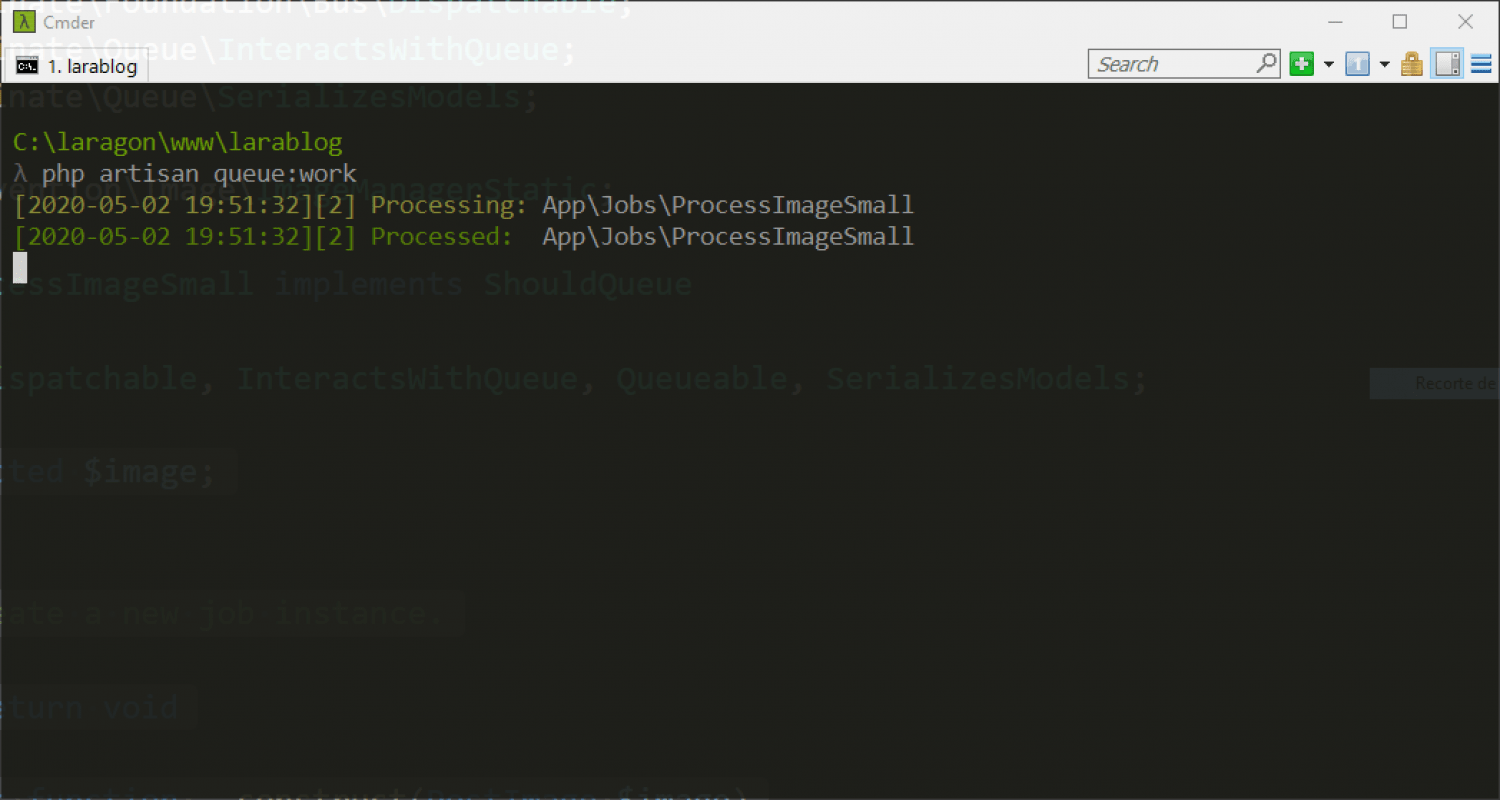

$ php artisan queue:work

And we will see in the console:

INFO Processing jobs from the [default] queue.

Every time a job is processed, you will see in the terminal:

.................................................................... 3s DONE

2024-07-12 09:44:31 App\Jobs\TestJob ............................................................................... RUNNING

2024-07-12 09:44:34 App\Jobs\TestJob ............................................................................... 3s DONE

2024-07-12 09:45:43 App\Jobs\TestJob ............................................................................... RUNNING

It doesn't matter if you dispatch jobs from your controllers or similar while the queue worker is NOT running: Laravel registers them and, when you start the worker, it processes them. That is all: with this, Laravel sets up a process to manage the jobs. We still need to create the job itself, which we will see in the next section.


The idea of this API is that we can postpone tasks that require high computational consumption (leave them in the background), all to improve the user experience and avoid application crashes. These heavy tasks are executed in another thread or process on our server and are resolved as they arrive or according to their priority.

Basically, this whole process takes place between the tasks or jobs (the computationally heavy processes) and the queues (the mechanism that registers the jobs).

Let's talk in a little more detail about these two fundamental components.

The jobs and the queues

Jobs are the heavy or processing-intensive tasks that we want to defer. They can be of any type, such as sending emails (in bulk or one at a time), processing images or videos, etc. We register these tasks as pending so that Laravel processes them one by one as it becomes available. The next question you may ask is how this organization is managed: who is in charge of managing the priority between jobs (the queues), and how do we keep all of this in order?

The jobs

In Laravel, jobs are an important part of the framework's queue functionality: they are the heavy tasks that are stored in the queues and processed from there. These tasks can be anything, such as sending emails or processing images and videos. This is ideal because these tasks are not part of the user's main session, so they do not consume its resources; we also avoid the famous "not responding" windows and, in general, get a better experience when using the app, since its performance and responsiveness are not affected.

Jobs in Laravel can be created to perform whatever task is needed in the back-end without the user having to wait for it to complete, such as sending an email or exporting data to Excel or a similar format.

The great thing about jobs is that they are executed sequentially. Suppose you create a job to send emails: the emails are sent one by one without overloading the page. That would not be the case if email delivery were handled in the user's main session and, suddenly, 5 or more users sent emails from the application: at the same moment you would be sending multiple emails and spending the resources that this requires.

In short, jobs in Laravel are an essential part of the framework's job queue, allowing heavy or non-essential tasks to be performed in the background to improve the performance and responsiveness of the main application.

The queues

At this point we have already introduced the concept of "queues" when talking about jobs, but let's explain it quickly. A queue holds the jobs that are waiting to be executed, that is, the "heavy" operations mentioned before. Instead of sending an email directly from a controller, the operation is stored in a queue and is then processed by another process.

Create your own queues in Laravel to maintain order

The answer to the question raised above is quite simple: queues are the mechanism that allows us to register these jobs. We can have as many queues as we want, and we can tell Laravel via artisan which queues to process first. Let's look at the following example:

php artisan queue:work --queue=high,default

With the previous command we tell Laravel to first process the jobs from a queue called "high" and then those from a queue called "default", which, by the way, is the queue used by default.
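To make the ordering concrete, here is a minimal plain-PHP sketch of what "check the queues in priority order" means. It is not Laravel's actual worker: the in-memory SplQueue storage and the job names are assumptions for illustration, and only the queue names come from the command above. In a Laravel application, a job is typically placed on a named queue by chaining onQueue('high') when it is dispatched.

```php
<?php
// In-memory stand-ins for two named queues (real drivers persist the jobs).
$queues = [
    'high'    => new SplQueue(),
    'default' => new SplQueue(),
];

// Jobs arrive in this order, but on different queues.
$queues['default']->enqueue('newsletter');
$queues['high']->enqueue('password reset');
$queues['default']->enqueue('monthly report');

// Same order as in --queue=high,default
$priority = ['high', 'default'];
$processed = [];

while (true) {
    $job = null;

    // Before every job, look at the higher-priority queue first.
    foreach ($priority as $name) {
        if (!$queues[$name]->isEmpty()) {
            $job = $queues[$name]->dequeue();
            break;
        }
    }

    if ($job === null) {
        break; // every queue is empty
    }

    $processed[] = $job;
}

print_r($processed);
```

Even though the password reset arrived second, it is processed first because it sits on the queue listed first.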

Connections: the structure that maintains the queues

Connections are how we indicate the way the whole queue process is going to be carried out (remember that the queue takes over the task; once the job is registered in a queue, the code that dispatched it plays no further role). To use this structure, we must define the connection and, with it, indicate:

- The controller or driver

- Default or Configuration Values

Therefore, through the connections we can specify the driver that we are going to use, which can be any of them, such as the database or other services. Each driver has its own requirements; for example:

- Database: a table to store the jobs, which we create below

- Amazon SQS: aws/aws-sdk-php ~3.0

- Beanstalkd: pda/pheanstalk ~4.0

- Redis: predis/predis ~1.0 or phpredis PHP extension

And some extra parameters for configuration.
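As a rough sketch of what a connection looks like, the fragment below follows the general shape of the connections array in config/queue.php: each entry names its driver and then adds its own configuration values. The specific values shown (table name, queue name, retry_after) are assumptions for illustration; check the file generated in your own project for the real ones.

```php
<?php
// config/queue.php (excerpt; values are illustrative assumptions)
return [
    // Which connection is used when none is specified.
    'default' => env('QUEUE_CONNECTION', 'database'),

    'connections' => [
        'database' => [
            'driver' => 'database', // the driver
            'table' => 'jobs',      // table where pending jobs are stored
            'queue' => 'default',   // queue used when none is given
            'retry_after' => 90,    // seconds before a reserved job is retried
        ],

        'redis' => [
            'driver' => 'redis',
            'connection' => 'default',
            'queue' => 'default',
            'retry_after' => 90,
        ],
    ],
];
```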

Configure the queue driver (connection) for the database

Now we are going to open the config/queue.php file and the .env file, and look for a setting called QUEUE_CONNECTION.

Depending on the Laravel version, it may come set to sync by default. If you look at config/queue.php, you will see that there are several types of connections we can use, including the one called database, which is the one we are going to use; configure it at least in the .env file. In my case, I'm going to leave it like this in the .env:

QUEUE_CONNECTION=database

And in config/queue.php it looks like this:

'default' => env('QUEUE_CONNECTION', 'database'),

Create the jobs table in our database

Now, the next question is how to create the table in our database. Following the official documentation:

php artisan queue:table
php artisan migrate

The first artisan command generates the migration that creates the table, and the second one runs it; with this we are ready.

Creating our first Job in Laravel

Now we are going to create our first job, which will use the default queue. We create it with artisan:

php artisan make:job ProcessImageSmall

With this, a file is generated in App\Jobs.

The generated class consists of two main blocks:

- __construct

- handle

The constructor initializes the basic data that we are going to use, and the handle function does the heavy processing; it's that simple. In the handle function we can send emails or process videos or images. For our example, we are going to assume that the job receives an image as a parameter:

protected $image;

/**
 * Create a new job instance.
 *
 * @return void
 */
public function __construct(PostImage $image)
{
    $this->image = $image;
}

This image is basically an instance of a model that looks like this:

use Illuminate\Database\Eloquent\Model;
use Illuminate\Support\Facades\URL;

class PostImage extends Model
{
    protected $fillable = ['post_id', 'image'];

    // Each image belongs to one post.
    public function post()
    {
        return $this->belongsTo(Post::class);
    }

    // Public URL of the image stored under public/images.
    public function getImageUrl()
    {
        return URL::asset('images/'.$this->image);
        //return Storage::url($this->image);
    }
}

And now, we are going to do a heavy process like scaling an image; for this, we are going to use the following package, which we can install using composer:

composer require intervention/image

The code that we are going to use is basically self-explanatory:

<?php

namespace App\Jobs;

use App\PostImage;
use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\SerializesModels;
// ImageManagerStatic is the static entry point of Intervention Image 2.x
use Intervention\Image\ImageManagerStatic;

class ProcessImageSmall implements ShouldQueue
{
    use Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    protected $image;

    /**
     * Create a new job instance.
     *
     * @return void
     */
    public function __construct(PostImage $image)
    {
        $this->image = $image;
    }

    /**
     * Execute the job.
     *
     * @return void
     */
    public function handle()
    {
        $image = $this->image;

        // Source image and destination of the scaled copy (public/images/small).
        $fullPath = public_path('images' . DIRECTORY_SEPARATOR . $image->image);
        $fullPathSmall = public_path('images' . DIRECTORY_SEPARATOR . 'small' . DIRECTORY_SEPARATOR . $image->image);

        // Load the original, scale it to 300x200 and save the result.
        $img = ImageManagerStatic::make($fullPath)->resize(300, 200);
        $img->save($fullPathSmall);
    }
}

Here we simply use the package that we installed earlier: we specify the location of the image, scale it to the given dimensions with the resize function, and then save the new image. With this we are ready.

Dispatch a job

The next thing to decide is where we are going to dispatch this job, which can be anywhere in our application, for example when we upload an image. Suppose we have a controller method to upload an image in Laravel:

public function image(Request $request, Post $post)
{
    $request->validate([
        'image' => 'required|mimes:jpeg,bmp,png|max:10240', //10Mb
    ]);

    $filename = time() . "." . $request->image->extension();
    $request->image->move(public_path('images'), $filename);
    //$path = $request->image->store('public/images');

    $image = PostImage::create(['image' => /*$path*/ $filename, 'post_id' => $post->id]);

    // Register the job in the queue; the resize runs later, in the worker.
    ProcessImageSmall::dispatch($image);

    return back()->with('status', 'Image uploaded successfully');
}

If you look closely at the code, you'll see that we call the job's static dispatch method to send it to the queue: ProcessImageSmall::dispatch($image)

Start the daemon to process the queues

Now, if we review the jobs table, we will see that a job was registered but has not been processed yet. To process it, we have to start the daemon (the worker) that is in charge of executing the jobs pending in the jobs table:

php artisan queue:work

With this, everything is ready and the job has been processed.

With this, we learned how to use jobs and queues in Laravel; remember that this is one of the many topics that we deal with in depth in our Laravel course that you can take on this platform in the courses section.

Video Transcript

Now we are going to process the queue. As I told you, it is important to understand that you can have your view mixed with anything else, even a button that sends a rocket to the moon; you can do anything. What I mean is that, again, this is independent of the normal flow of the application. I think I reloaded the page a couple of times, which is what I did in the previous class, so let's see what happens here. The first thing we have to do is open the log file to keep an eye on it. Notice the time the page takes to load; since we are working locally, the process is going to be very fast. For this job, I am going to make it stop for about 3 seconds by means of a sleep, which simulates sending an email or anything else, and then the operation ends. So here we are going to start the worker, which will also process the pending jobs:

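<?php

use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Support\Facades\Log;

class Test implements ShouldQueue
{
    // ***

    public function handle()
    {
        sleep(3); // simulate a slow operation, such as sending an email
        Log::info('Test.');
        sleep(3);
    }
}

When running the command:

$ php artisan queue:work

If you have some jobs backlogged, they will be processed automatically.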

Here you can see that they are being processed; that is, again, the jobs that we dispatched before were not lost even though the queue worker was not active. This gives robustness to our project, to our application in general, since, again, jobs will not be lost. And in case there is a problem (I will tell you more about this later), we also have a tries option:

$ php artisan queue:work --tries=3

With it we simply indicate how many times the worker will attempt the job in case something went wrong while executing it. So that's basically it.

If the job throws an exception, it is a failed job.
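The idea of retrying a job a limited number of times can be sketched in plain PHP. This is only an illustration of the concept, not Laravel's implementation: runWithTries and both sample jobs are hypothetical names invented for the example. An attempt that throws counts as failed, and the job is only considered failed once all attempts are used up.

```php
<?php
/**
 * Hypothetical helper: run $job up to $tries times.
 * Returns true on success, false if every attempt threw.
 */
function runWithTries(callable $job, int $tries): bool
{
    for ($attempt = 1; $attempt <= $tries; $attempt++) {
        try {
            $job($attempt);
            return true;
        } catch (Throwable $e) {
            // A thrown exception marks this attempt as failed; try again.
        }
    }

    return false; // attempts exhausted: the job counts as failed
}

$calls = 0;

// Fails on the first two attempts and succeeds on the third.
$flaky = function (int $attempt) use (&$calls): void {
    $calls++;
    if ($attempt < 3) {
        throw new RuntimeException('temporary failure');
    }
};

// Never succeeds.
$broken = function (int $attempt): void {
    throw new RuntimeException('permanent failure');
};

$flakyOk = runWithTries($flaky, 3);
$brokenOk = runWithTries($broken, 3);

var_dump($flakyOk, $brokenOk);
```

In a real Laravel job, the number of attempts can also be declared on the job class itself through a public $tries property.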

If the server blows up here and tells us that it could not process the request in time, and this is only development, imagine what happens in production, where the environment is more controlled or has fewer resources. Here you can see how it works. Imagine, again, that this is sending an email, an operation that takes several seconds per client, and that several clients connect at the same time. Obviously, the application or the server is going to be overwhelmed in computing power and memory, and at that point it would throw an exception. Of course, the work or progress that the client was making would be lost, apart from all the time the client spends waiting for the operation to finish. Obviously, that would not be a recommended environment.

But if we use this scheme, it will simply process the tasks one by one, just as we saw. That is also a very important point. I am going to simulate several clients here; suppose that three clients come. How can I simulate three clients? I can reload this three times, so we are going to have three jobs that, again, you can think of as three separate clients. So I come here and reload three times. Well, I reloaded about four times, and you will see that it performs the action one by one; therefore it does not get saturated. This was the example I was giving you; it is very different.

Jobs vs processing the task on the main thread

Suppose you are going to transport 20 boxes, say 20 laptop boxes, so they are fairly large. If they hand you those 20 boxes at once, you will not be able to handle them, because there are too many to carry in your hands; it is very different if they give you one box at a time, and you handle each one and place it in its final location. This is a good example, and therefore here the server would not, as people colloquially say, fall over; it would not have a memory leak, or whatever the error is called. It can process the jobs little by little and not in parallel. That is another very important point in the use of queues and jobs.

Now that this is clear, remember that if you still have any doubts you can go watch the video of me drawing boxes, or the presentation video, because it is important that you understand how everything works. In short, the queues are simply a queue, that is, the command that we executed before; everything else is the job, which is the heavy process, and we call it wherever we want depending on the logic of our application and on what the job does.

Again, if it were to send an email to a newly registered user, you would have to come here to the user controller and look for the register method; well, sorry, not register, the store one. We are simulating the process a little. It wouldn't even be here, because I think we don't have the user controller exposed; no, we don't. But suppose that this was the one for the end user: you would come here and, just once the user is created, you would place your job, dispatch it, and pass it your user and the content. Everything else depends on how you handle it: if it were a single job to dispatch all the emails, then here you would have to pass the content, and if it is specific to registered users, then it would simply be the user. But that would be a simulation; we do it in the next class. So let's go.

We are going to talk about a topic that is also very important: queues and jobs, or in Spanish colas and trabajos (queues is colas and jobs is trabajos). I say it is a very important topic for two simple reasons, seen from the point of view of the system: with this scheme, which we do not know yet, we can improve the responses to the client and also the fault tolerance of the system. What do I mean by this? Basically, I mean processes with a high level of computation, such as sending an email, generating a PDF or reading an Excel file. I am talking about files with a large volume of data: the more data or pages they have, the more complicated or slower it is to read them, and the greater the computing consumption. That is what I mean; I think it is quite simple to understand. If, for example, I am going to read this file, which can be exported to PDF, it is going to take longer. It is not the same for Laravel to read a five-page PDF as to read this one, which has 572 pages; I think it is a little obvious.
What I am telling you is that this type of process is usually done in the classic way, as we did before: we have a controller in charge of reading the file, and we read it line by line, all in the main thread. Here is one of the disadvantages of web applications: in a desktop application we can access the machine's resources more easily, that is, create a thread or something like that. With PHP, or in web applications in general, it is always a little more complicated, and that is why this type of mechanism exists, which basically allows us to run a separate process: instead of executing the complicated task in the main thread, we do it in a separate thread. It is that simple. At the end of all this I will do some exercises with boxes so that the idea is understood in a slightly more practical way, and from the next class we start with the implementation.

But why does it have this double name, queues and jobs? Basically, a job is a class (here I'm going to show you one), like a component, a controller or a model. It is a class that does something. What is that something? A task, as open-ended as what we have in controllers, but a little more closed: it is not going to return a view. Well, it could, if the complicated process ends up producing a view, but what I mean is that it is not obliged to return anything. It simply performs a complicated operation: again, processing an image or, in general terms, processing a file, whether generating it or consuming it (that is, reading it), and then returning something or doing some operation in the database if necessary. For this simple example, note that the only thing this task does is hang for 3 seconds, and this will easily allow us to
understand how this works. If you call this sleep function in the main thread, it blocks for 3 seconds; that is, the page appears to be loading for 3 seconds, whether you do it in the controller, a component or a route. But if we do it in the job, it runs in a separate thread, and it is simply the secondary thread that sleeps for 3 seconds. So this is the job part. Here we can send an email, read an image, a PDF or an Excel file, or generate a PDF or an image; we can do all that right here because it is completely free-form and, as you can imagine, we have access to the entire Laravel API and any packages we want to import.

So we already have the job, but an extremely important piece is missing, and that is where the queues come in. The queue is a process that we do not really implement; we simply use it, although it is obviously very customizable. I always give you the example that it is simply a thread, that is, a secondary process, but it does not have to be just one: you can create two, three, as many as you require or your machine supports. So that is the queue part, which processes these jobs. I think that when we get into practice this will be understood better; for now I do not want to go into too much depth or confuse you more. But remember that you can pause this class and read this, so that you also understand it in a more theoretical way; I explain it in the most practical way and leave you the more theoretical part here.

Then, like everything in life, we also have drivers, which are the following. I do not want to dwell on them too much. For example, sync executes the job synchronously, right away, as part of the same request.
Obviously, since this uses the main thread, it does not help us much; it is mostly suitable for testing and development environments, because, again, we are not gaining much: the work continues in the main thread. We want this process to be asynchronous, that is, to send the task to a secondary thread so that the main thread can continue its normal flow, and when the task finishes, it simply finishes.

We also have the database driver. As we have seen before, it is not the best scheme, because in the end we are putting load on another process. Then there is Redis, which, as we already know, is a database. I will introduce it quickly in case you skipped some previous videos: Redis is a key-value database whose main characteristic is that it is extremely fast, and it is usually used for this type of operation, either for caching, as we used it before, or, in this case, precisely for queues. It is the one we are going to use in this block, or the database, because, again, this is just an experiment. Whichever driver you use, as with other features such as the cache, the implementation is completely transparent, so don't worry too much about this.

The rest are simply other programs that we can use. I'm not going to go into much detail, since you can consult their documentation; you just search for the name and get more information. Obviously, each one is a program that has to be installed, just as we have Redis installed, and then we reference it and that's it. And null means that you disable the queue system.

That is practically everything. Again, I know it is a bit abstract, especially because there are now two things and not just one, as we are used to handling, so we are going to leave the theoretical part there. So how do we configure it? As you can guess, we already have a file called queue, which in this case handles the queues.

Note that it is just called queue; that is, there is no file called job. Just as with the cache, the mail, the logging and the database, it has a very similar structure: here is the environment variable, which is the one used by default, and by default we are using database, which would work correctly for you; you can use it without any problem. For demonstration purposes, notice that I am working on this project because it is the one I have linked with Laragon; otherwise I would use the general one, but that one runs on Laravel Herd, and we already know that the free version of Herd does not include database services, so I would have to install one in parallel or see how to enable only Redis here:

I already have it enabled, although it does not appear here. How strange, but it is enabled, and I can now test it quickly. So this is just for the demonstration; you can use whichever project you want. Again, if you do not have Redis installed you can use the database driver, since for the purposes of this block it is exactly the same; it is simply practice. If you are on a Mac you can use DBngin, which has a Redis option, and otherwise I would recommend Laragon, where you enable Redis as I showed you in the previous video.

I decided to separate this part: we are going to draw some boxes here so that all this is understood in a more practical way, and in the next class we move on to the first implementation. Again, I do this because it is a two-part subject, queues and jobs, and I know it can be confusing at the beginning; a few years ago, when I first saw this, I did not fully understand the why of everything either, and that is why I want to make this video a little more illustrative. Remember also that these are videos: if you still do not understand it from this one, that is fine, it happens. Go to the practice first, that is, watch the following video, and then, if needed, come back to both the presentation and this video so that you understand it more comfortably. But again, everything is learned with practice and by watching these reinforcement videos. With this clarified, let's go.

Obviously we have the main thread, the one that responds to requests, which I am going to call main here. Sometimes we have to do tasks that are expensive, as I told you before, such as processing files, to put it in generic terms; but again, it can be anything, for example connecting to an API, which is also a process that takes a certain time. For example, when we make an online purchase, suppose on Amazon, which processes I don't know how many hundreds of purchases per minute: obviously it is not going to hang on all those purchases by placing the purchase operation in the main thread, which means communicating with the bank, sending it the information and then making the payment. It is not going to do that in the main thread, because with a high volume of purchases, which it almost always has, no matter how good the servers are, at some point they are going to start to fail as the main thread saturates. What Amazon does is simply send the purchases to a work queue, and from there they are processed little by little. This is also why we see that it always takes a few minutes, a few hours or even days to process a purchase. Of course, they will have other kinds of logic, with some verification as well, and that is why it can take so long; I do not believe that the queue takes three days to be processed. It is also something of an internal matter for them, but the important thing is to notice that it is not done in the main thread. If it were done in the main thread, as when we make a purchase with PayPal, for example, which surely has several things behind it but is a bit more transparent, it would be immediate. So here we have the main thread, and suppose in this example that we want to process images.
Well, the normal flow, which is to do it directly in the controller (and it is understood that it can also be a component), would be this: when we want to process an image (sorry, this here should say "process image"), we do it in the main thread. It follows the basic flow and takes a certain amount of time, which again depends on what you are processing. Obviously, this is not the best. Here is what I was telling you: suppose this is an open process that multiple customers can use, and that many customers use it at the same time. One of two things is going to happen. In the best case, the operation takes longer, so you will see the browser loading, loading, loading, because the server has to handle multiple requests in the main thread and, in this case, also process the images. Otherwise, the server is simply overwhelmed and exhausted, and that is when the famous request timeout messages or something similar appear; in the end it simply gives a 500 error because it could not finish within the processing time.

With queues and jobs we can solve all that. In that example there are several clients, suppose 10, and the application is trying to process those 10 requests at the same time. When we do it through queues and jobs it doesn't work like that: it goes one after the other. If those same 10 clients make the request at the same time, the only thing that happens is that the work is added to the queue, and the process or processes that we enabled process the jobs one by one. On the one hand we have a parallel scheme and on the other a sequential scheme; that is the main difference.
It is like being handed boxes. If they give you 10 boxes at the same time, you will not be able to carry them. But if they give them to you one by one, you will be able to handle all 10. That is the problem we have with the first scheme.

In the other scheme we again have the main thread, which I am going to call main, and the task we want to perform, which I am going to call PI, for processing images. Instead of placing the task in the main thread (everything enclosed in this cloud is the main thread, or main process), we pass it to a secondary process, which is our queue. What we pass to it is a job, which in the end is a class that does something; we pass an instance of it, which can receive parameters like any other class. This other part is the queue; I am going to label it simply Q so that the drawing does not get too ugly. It can be one process, two processes, three processes, as many as you require and your machine supports; you are not going to configure 100,000 processes on a machine that cannot support more than two. In this drawing each little box is a job, which I will mark with a J (please do not ask for a refund, this course is not about drawing boxes). Suppose again that 10 people make a request for a fairly expensive task: instead of doing the work in parallel, it is done sequentially. Boxes are simply added to the queue and processed in the order in which the tasks arrive. It is that simple.

Going back to the boxes: imagine each box holds a computer, a desktop or a laptop. If they hand you 10 boxes at the same time, maybe you can hold them; if not 10, make it 20. You will not be able to grab 20 at once, and they will fall. But if they give them to you one by one, you take one and place it on the desk, take the next one and place it on the desk, and you will be able to handle all of them. If they give you the 20 at the same time, at least half of them will surely fall. That is the difference, and you can compare it with the poor server, which in the main thread not only has to support the 20 laptops, that is, the 20 processes you are passing to it, but also has to return a response to the client.

Something I did not mention in the previous video, although it is fairly obvious, is that this improves both the response capacity and the fault tolerance of the application. The response improves because the operation is no longer done in the main thread. The fault tolerance improves because the application no longer fails with 500 errors when it cannot process the 10 tasks at the same time. With this we improve the experience that the user has in our application, which is the most important thing to take into account when building our applications. I hope this design has made things clear. I was going to leave you the image, but no, I am going to delete it. Remember that you can come back to this video later, when you implement the example application in the following video.

Now that we know a little about how queues and jobs work, the next thing we are going to do is a small experiment to see them in practice. Once we have created and configured our job and dispatched it from somewhere (in this case from a route, as an example), we run the worker command to start a parallel process; it can be customized, and some of its parameters are listed at the end of this block. Remember that if you take this to production, you have to run this type of command on a dedicated server, not on shared hosting; that is, on something where you can launch commands through a terminal.

To create the job we have, as you can guess, the make option: we indicate job and then the name of the job, and as always you can place it in a folder if you want. The job we create is going to sleep the thread and write something to the log so that we can follow it more or less in real time. Creating the class is not enough: if it is not instantiated somewhere, it is simply not used. It is like models: you can create your model class, but if you do not use it, the file just sits there. So somewhere, in a controller or anything else that is consumed by the client, we dispatch the job, which sends it to the queue that we have started (or are about to start) as a separate process. That is practically everything. I am also going to open an additional window so that in a few moments we can watch, side by side, how everything is working.

For this example I am going to use the database driver. As I told you, you can use any driver other than null, which would disable the queue; later we will switch to Redis, but we can start with this one without any problem, because we are in a test environment and, while we are in development, the choice of driver does not matter.

Let's start by creating the job with php artisan make:job TestJob. We hit enter and wait; sometimes this is quick and sometimes it is not, even though it is simply creating a file. I did not explain the structure of the class much, but it is quite understandable. The services used at the top we do not change; you could change them if necessary, but that is a slightly more advanced approach, and for most cases the defaults are fine. The constructor is there to initialize things, that is, to receive parameters: to send an email, for example, you would pass the user or the email address, the body and so on, and you would declare them as attributes there. We will do a small example of that later. The crown jewel is the handle method, which is the one that performs the operation itself.

In handle I want to write to the log at the info level. It is important that the log channel you are using is configured with a level at or below this one, according to the scale I described before; otherwise use error or whatever level you prefer. The important thing is to register the log entries, because otherwise we would not see anything. The import, in case you do not remember, is the Log facade from Illuminate\Support\Facades. Then we use PHP's sleep function, which is very important here: it puts the process (or thread, whatever you call it) to sleep for the number of seconds you indicate, in this case 3. I duplicate the log line and label one before and the other after so that it is easier to follow. The sleep stands in, for the umpteenth time, for any expensive operation that we want to send to a job.

Now the job is defined and we need to use it somewhere. In a real case you would place it where it is needed: if you want to send an email when the user registers, you would find the controller in charge of registering the user and dispatch the job right after the registration. Here I am going to go back to the routes and create one. Remember that I am using this project because it is the one I have linked with Laragon, and I already have Redis, so here I place:

.env

QUEUE_CONNECTION=redis

Give the route whatever name you want. I am going to implement it right here in the routes file, to simulate a controller or whatever you would use to process the request. In it we can do anything, for example return our view. Before that, of course, we have to dispatch the job. That is, we are the ones who decide when the job is used: you can think of it as creating an instance of a class, after which you continue with the logic of your controller or component. The import is the usual one, simply the location of the file. Now we place the dispatch:

ProcessImageSmall::dispatch($image);

We will see the attributes later; for now let's settle for this. There is one important point before starting the queue. If we visit the route now, it works correctly: the application does its job without any problem even though the queue worker is not started, and the job is still dispatched. Dispatching is completely independent of the rest of the functionality of our application. Keep in mind, though, that when you do start the worker, it will begin processing the jobs that accumulated in the meantime.

This is excellent, because the worker is a separate process running an expensive task, and at some point the developer or whoever manages the server may fail to notice that the queue is down. Even then, the application does not give an error, and the jobs that were dispatched are not lost: when the worker starts again, it picks them up and processes them. With that we gain fault tolerance and a more robust system, for the reasons discussed before: expensive jobs are not lost just because the system is short of memory or processing power at a given moment and the application fails. They are simply queued by Laravel. We are going to run the worker in the next class so as not to lengthen this one too much.
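Putting the steps described above together, the test job might look roughly like the sketch below. It assumes the class generated by php artisan make:job TestJob in a Laravel 9 or 10 project (newer versions generate a slightly different set of traits), and the log messages are placeholders of my own. It only runs inside a Laravel application.

```php
<?php

namespace App\Jobs;

use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Queue\SerializesModels;
use Illuminate\Support\Facades\Log;

class TestJob implements ShouldQueue
{
    // Traits added by the generator; for most cases they are left unchanged.
    use Dispatchable, InteractsWithQueue, Queueable, SerializesModels;

    // The constructor is where parameters (a user, an email body, an image)
    // would be received and stored as attributes. This test job needs none.
    public function __construct()
    {
    }

    // handle() performs the actual work when the queue worker picks up the job.
    public function handle(): void
    {
        Log::info('TestJob: before'); // placeholder message (assumption)
        sleep(3);                     // stands in for any expensive operation
        Log::info('TestJob: after');  // placeholder message (assumption)
    }
}
```

With this class in place, a call such as TestJob::dispatch() inside a route closure or controller method would push the job onto the configured connection, in the same way as the ProcessImageSmall::dispatch($image) line above. The two log entries should only appear once a worker is running, which is normally started from a separate terminal with php artisan queue:work.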

- Andrés Cruz
